Bark, woof, ruff: UTA scientist aims to decode dog ‘speech’

What if the next time you asked your dog “Who’s a good boy?” you could understand Fido’s response?

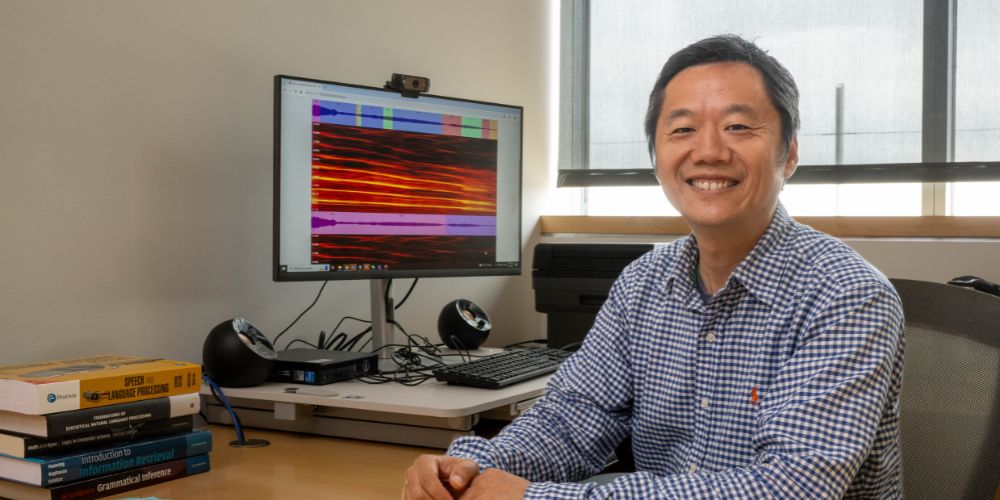

Kenny Zhu, a professor of computer science and engineering at The University of Texas at Arlington, plans to use machine learning to translate dog sounds into phonetic representations and, eventually, words. A three-year, $483,804 National Science Foundation (NSF) Research Experiences for Undergraduates site grant is funding his research.

“Scientists have been trying to decode the noises of whales, dolphins and chimpanzees for many years, but most of that research has been conducted by biologists or ecologists with little computer science knowledge,” Zhu said. “That’s changing, and now the use of machine learning has been introduced to analyze data.

“My research is in natural language processing for humans, so why not look at how animals communicate?”

To collect the sounds, Zhu downloads videos from YouTube and other sources and strips away all audio except the barking. He uses machine learning to catalog the sounds, segments them into syllable-like pieces and assigns each syllable a symbol, much like a letter of an alphabet.

To date, he has transcribed about 10 hours of barks into syllables.

“We are also trying to capture the things around the dog and the activity the dog is performing at the time to roughly guess what the dog is communicating and give context to the syllables,” Zhu said.

Early indications suggest that dogs from different parts of the world vocalize differently. When Zhu compared videos of Shiba Inu dogs in the United States and Japan, he found that their vocalizations differed: the Japanese dogs tended to “speak” faster and at a higher pitch than their American counterparts.

The NSF’s Research Experiences for Undergraduates program supports active research participation by undergraduate students. This project will involve students from the Computer Science and Engineering Department as well as students who study animal behavior in biology and other disciplines. The students will help collect data by submitting videos of dogs and other animals, and they will run the sounds through the transcription pipeline to build a catalog of sounds across species.

“Machine learning plays a role in everything we’re doing in this project, so it’s a great opportunity for students to learn about big data analysis and explore how machine learning can be applied in areas that it hasn’t been before,” Zhu said.

- Written by Jeremy Agor, College of Engineering